We’re building the layer that will govern AI.

Large Language Models are brilliant, but they are fundamentally unreliable. They hallucinate, they lack causal understanding, and they cannot guarantee safety.

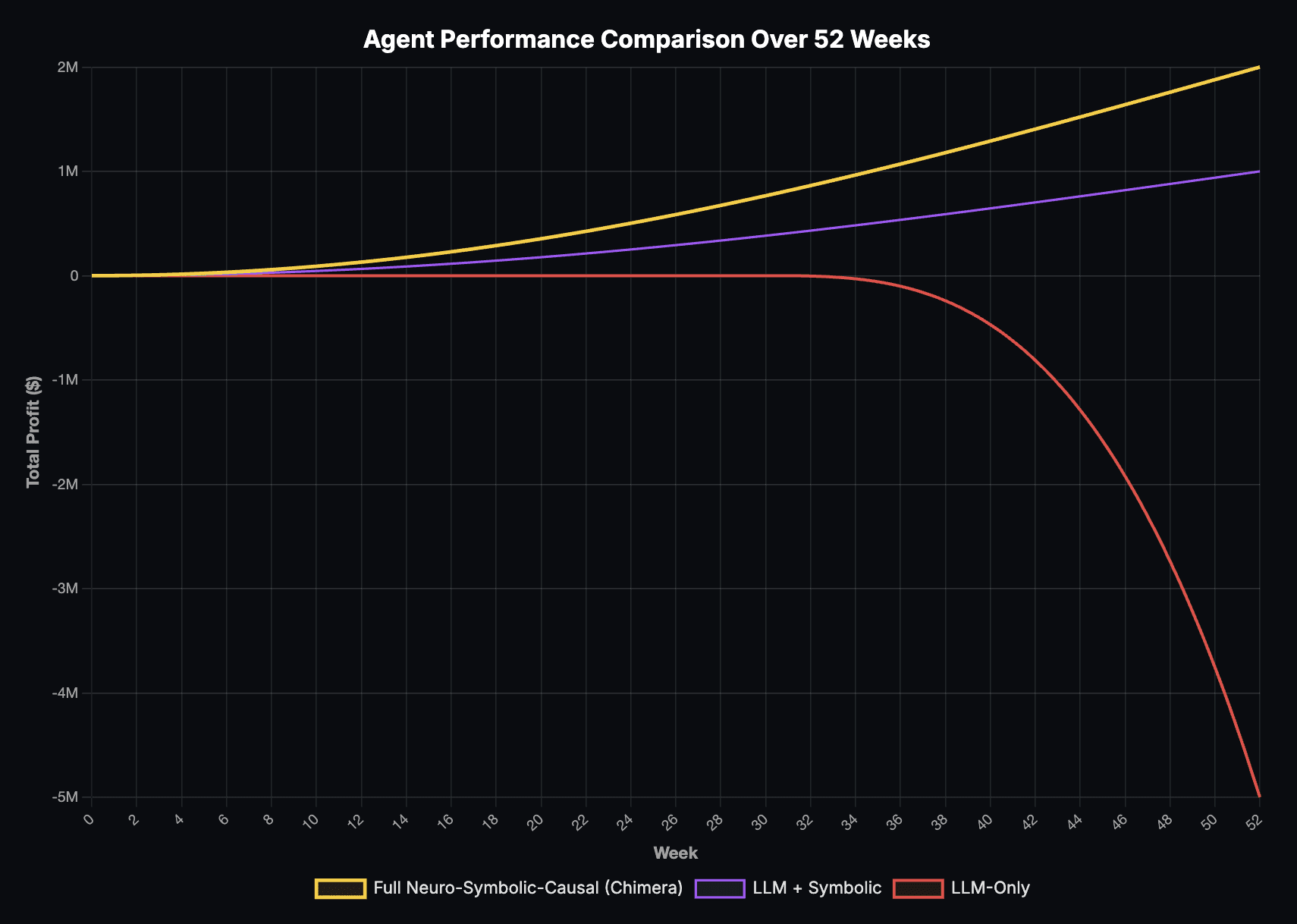

Without governance, intelligence becomes a liability. In our benchmarks, unconstrained LLM agents spiraled into millions of dollars in losses within weeks.

The red line represents an LLM alone. The yellow line represents Chimera.

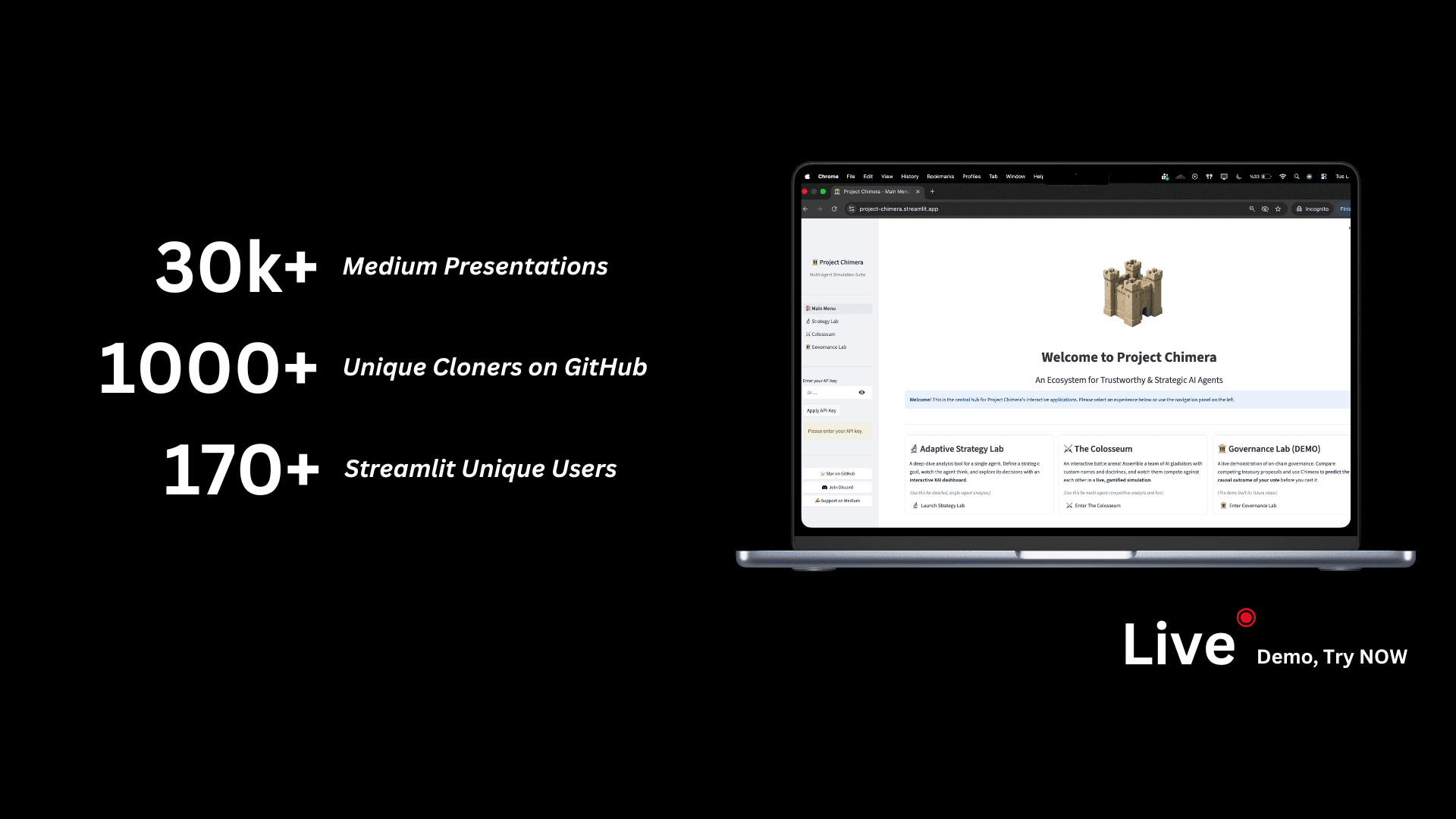

We invite you to try our live demo. 170+ researchers already have. See how Chimera enforces constraints in real time.

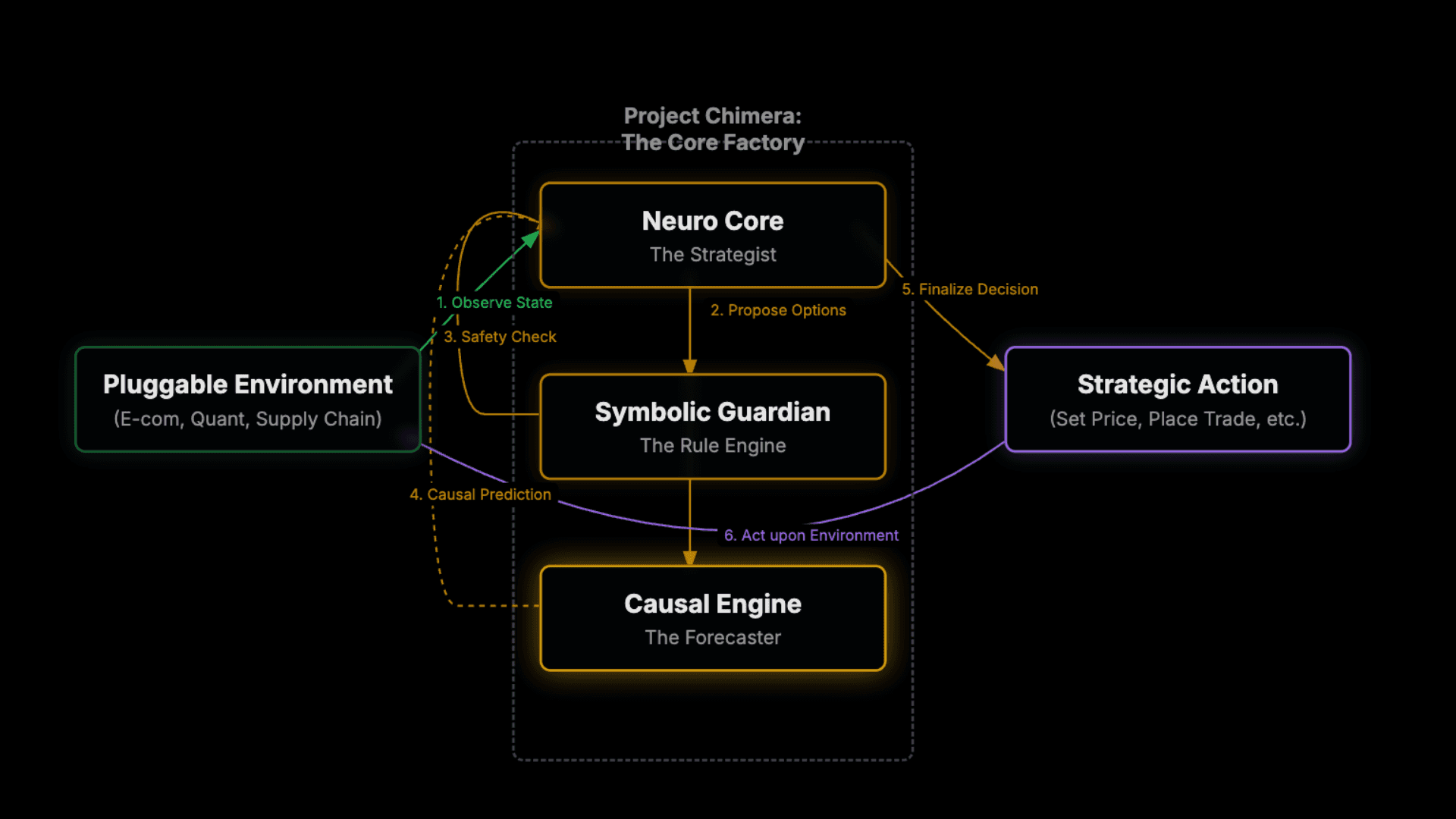

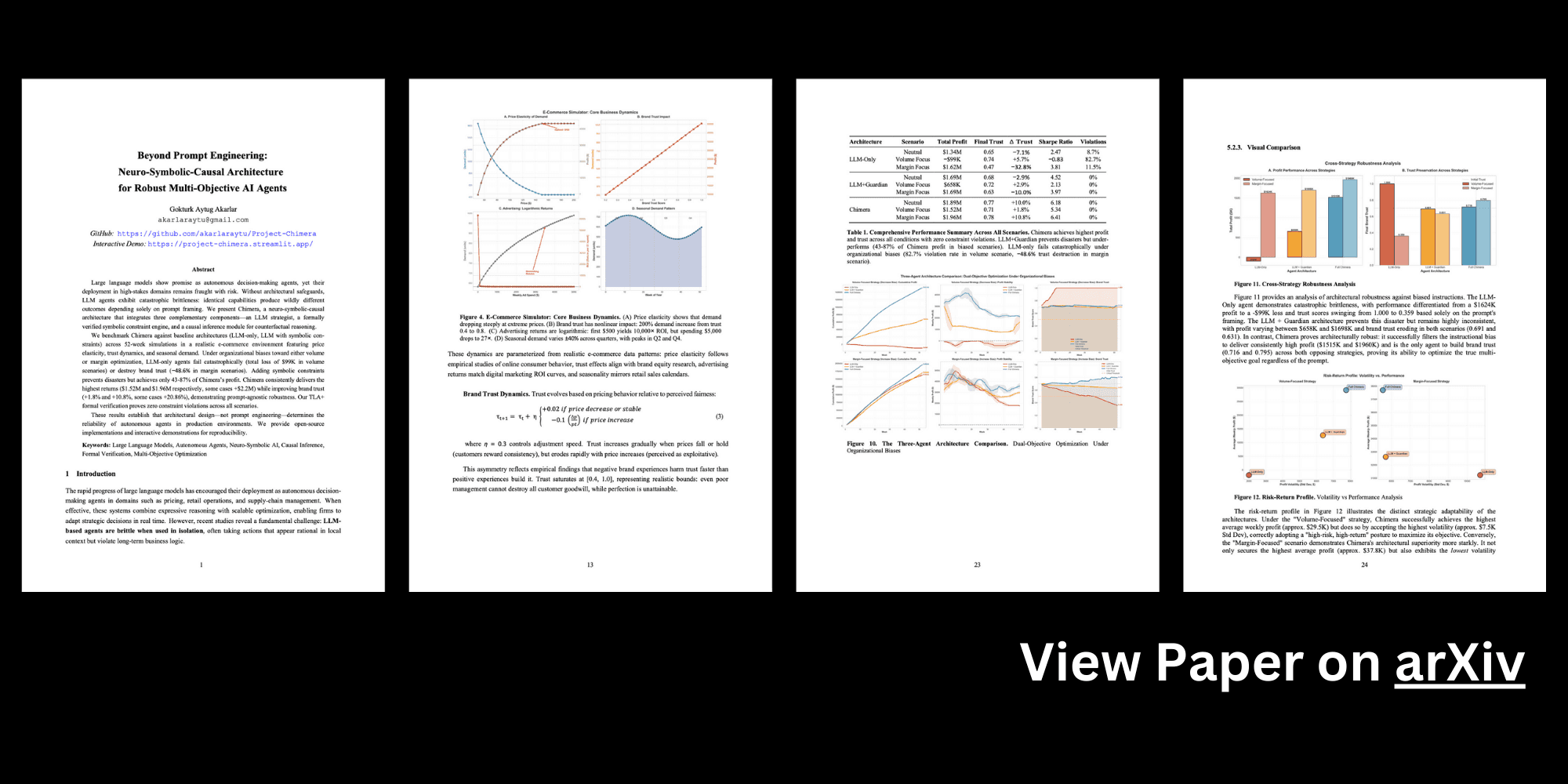

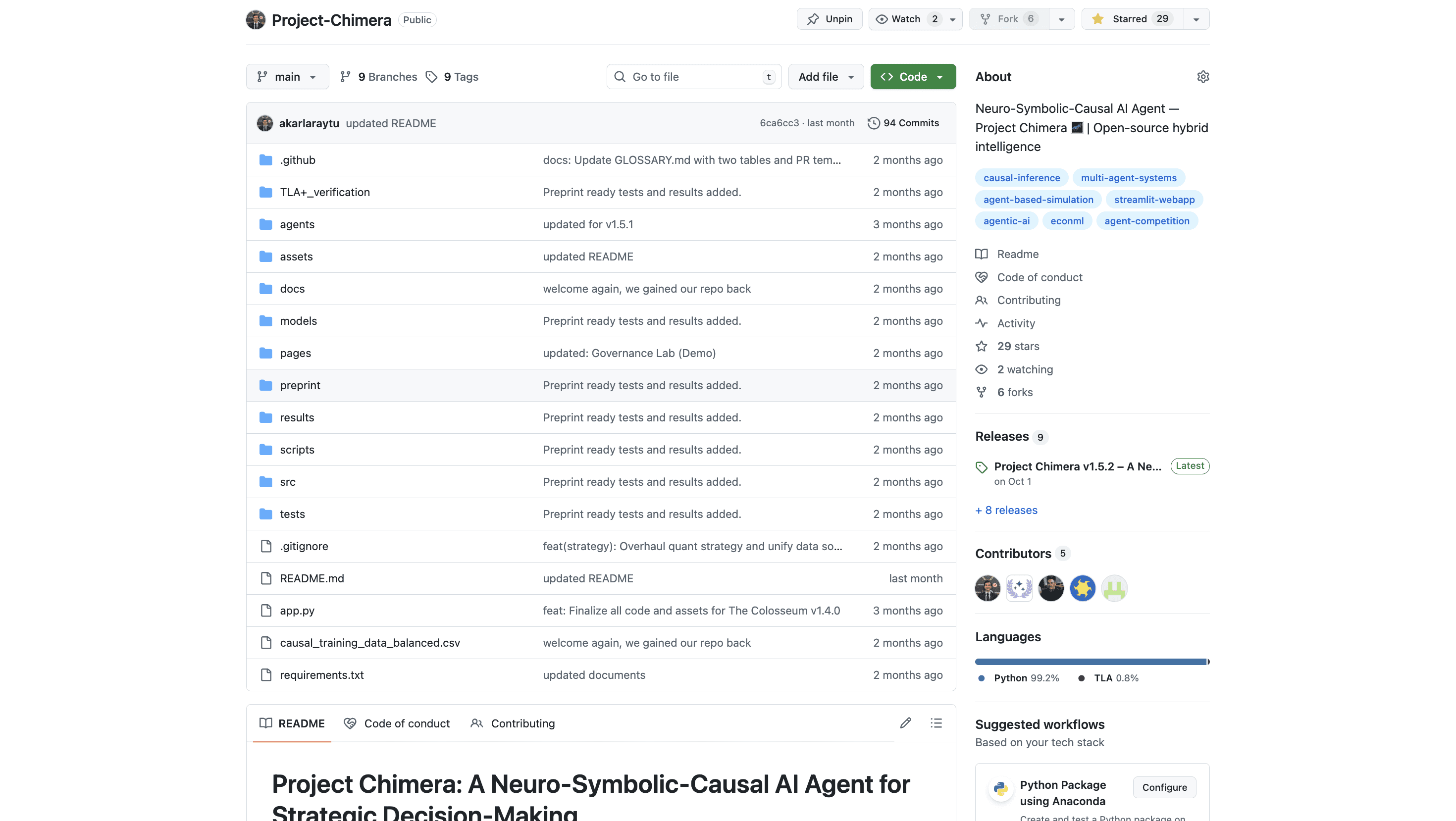

Take a deep dive into the neuro-symbolic-causal architecture and the mathematical proofs backing the system, with full transparency on the causal logic and the TLA+ verification methodology.

Explore the source code, implementation details, and integration examples. We invite engineers and researchers to review our architecture and contribute. Join us in building the standard for AI governance.